Facebook post found this week, labelled June 25 at 16:46

HELL AND EARTH “Do your research!!!”

Here’s the thing. Research is a learned skill; it is hard, it is nuanced and complex, and it is true that the majority of people would not even know where to begin or even HOW to do [their own] research.

Research is NOT:

Googling, scrolling your FB newsfeed, or watching YouTube or 4Chan to search for the results you are hoping to find to be “true.” These are called confirmation biases, and are quickly and easily ruled out when doing actual research.

A post credited to Linda Gamble Spadaro, a licensed mental health counselor in Florida, sums this up quite well: “Please stop saying you researched it. You didn’t research anything and it is highly probable you don’t know how to do so.

Did you compile a literature review and write abstracts on each article? Or better yet, did you collect a random sample of sources and perform independent probability statistics on the reported results? No?

Did you at least take each article one by one and look into the source (that would be the author, publisher and funder), then critique the writing for logical fallacies, cognitive distortions and plain inaccuracies?

Did you ask yourself why this source might publish these particular results? Did you follow the trail of references and apply the same source of scrutiny to them?

No? Then you didn’t…research anything. You read or watched a video, most likely with little or no objectivity. You came across something in your algorithm manipulated feed, something that jived with your implicit biases and served your confirmation bias, and subconsciously applied your emotional filters and called it proof.”

This doesn’t even go into institutional review boards (IRB’s), also known as independent ethics committees, ethical review boards, or touch on peer review, or meta-analyses.

To sum it up, a healthy dose of skepticism is/can be a good thing…as long as we are also applying it to those things we wish/think to be true, and not just those things we choose to be skeptical towards, or in denial of.

Most importantly, though, is to apply our best critical thinking skills to ensure we are doing our best to suss out the facts from the fiction, the myths, and outright BS in pseudoscience and politics.

Misinformation is being used as a tool of war and to undermine our public health, and it is up to each of us to fight against it.

I've not edited this non-British English. When I copied this from the Facebook thread as sent to me by an ex-colleague, it took a long time for the material to transfer. The reason for this was that a great deal more was copied across, easily the last six screenfuls I'd looked at, perhaps a lot more. I guess the link or hook for all the extra was in front of the first visible character at the head of what was copied. It makes me wonder just how much of what one has been doing is available, and especially to someone else, i.e., someone able to look at what I've been doing. That's too much like having CCTV in the toilet.

Oh. Okay, we use the word 'research' to mean different things. One wonders how many of these attributes are required for some investigation to qualify as 'research'. Perhaps it matters who the audience is? If you prefer, maybe it matters in what context this 'research' occurs.

I will agree very readily and happily that any, even perhaps every, comment or opinion you take on board should be treated with scepticism as to the 'evidence' claimed. I agree that all such evidence should be inspected for bias and misinterpretation. I am not at all happy with published work (meant to be read by many not known to the author) that does not indicate sources.

On the other hand, I feel that a 'literature review' is inherently incomplete and is anyway a reflection of the weight of opinion to date rather than a measure of accuracy; opinion, not fact. Further, a literature review becomes of value when there is disagreement, because then the review can have merit in attempting to explain how conflicting authors might both (or all) be correct. I see no benefit in a review of what is already consensus agreement.

As for 'a random sample of sources', I wonder immediately if the meaning of 'source' just moved: to sample at random implies that there is access in some way to a large, in some way 'complete', body of the available material. Sorry, that body has to be biased, and it might well be difficult to establish whether those biases are relevant. As for complete, I am very unclear how one could tell what 'complete' might mean, so that 'material' itself becomes some subset such as 'published academic material within the sources I chose as being sufficiently available'. Many of these, indeed most, are closed to me since I am not employed by a university.

Review, etc., means the so-called 'peer review'. This of course is as open to abuse as any other review process, in which people (with opinions they feel the need to defend for any reason) have negative reactions to any and all opinions that put their own position in jeopardy, real or imagined. What we need is disinterested peer review that measures the processes themselves, not the conclusions reached. For rather more research than I'd like to have read, we can automatically question the use of statistics almost to the same extent that economists differ in opinions. In reaction, we tend to use statistical tools in 'standard' ways, irrespective of the aptness of those tools. Partly this is because such behaviour is expected, to the point that the work can be seen as not-right until such processes have been done; I have long suspected that many such tools are applied by rote rather than reason. Indeed, if it were me, I'd do what was seen as 'expected' and then comment on applicability, though the academic mathematician in me feels that the inapplicable work should not be done at all.

The comments on bias ('ask yourself why this source might publish these particular results') I agree with completely. It is unconscious bias that is hardest to eliminate. But equally, known bias can cause one to reject conclusions without reading them: the bias is perceived as so strong that it doesn't matter at all what comes from such a body, you're going to reject it, and therefore there is no point in even bothering to read it. I am ambivalent here, since I do both the rejection and the reading. Reading work from a source of known bias becomes a study (research, even) into how data might be collected and then presented with the bias invisible. As such, I see biased research (sorry, I mean research from sources where bias is presumed) as offering case studies in how to present work so that the desired outcome results. This applies to any vested interest funding research. Unfortunately, it is very difficult to believe any body of any sort that says they want the research to be unbiased when quite clearly they have (in)vested interest in the result. The same holds when the result is very obviously going to be binary, with one outcome producing gain and the other not: showing that smoking is not bad for you, or showing that climate change is not happening, or that <this process> is or is not adding to climate change. We go further with the indirect stab, say by showing that <this process, adjacent but not the same as a vested interest> is/is not bad for the environment, with the subtext that therefore the vested interest is either the same or subtly different and so is/is not painted in the same good or bad light.

20210820 DJS

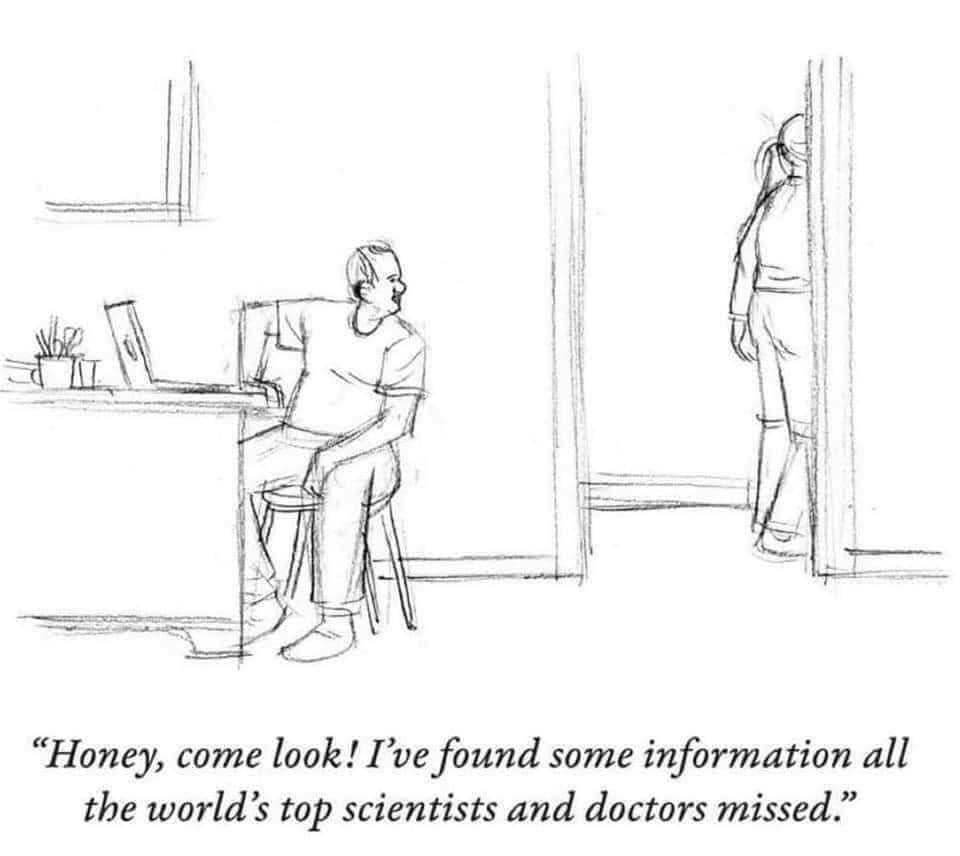

top pic shared on FB from Science: evidence is intelligence 20210907 07:00